1. Introduction

For simple applications, I have been using the same architecture for a few years. Although it isn’t particularly fancy or cutting-edge, it has consistently proven effective for small projects and startups.

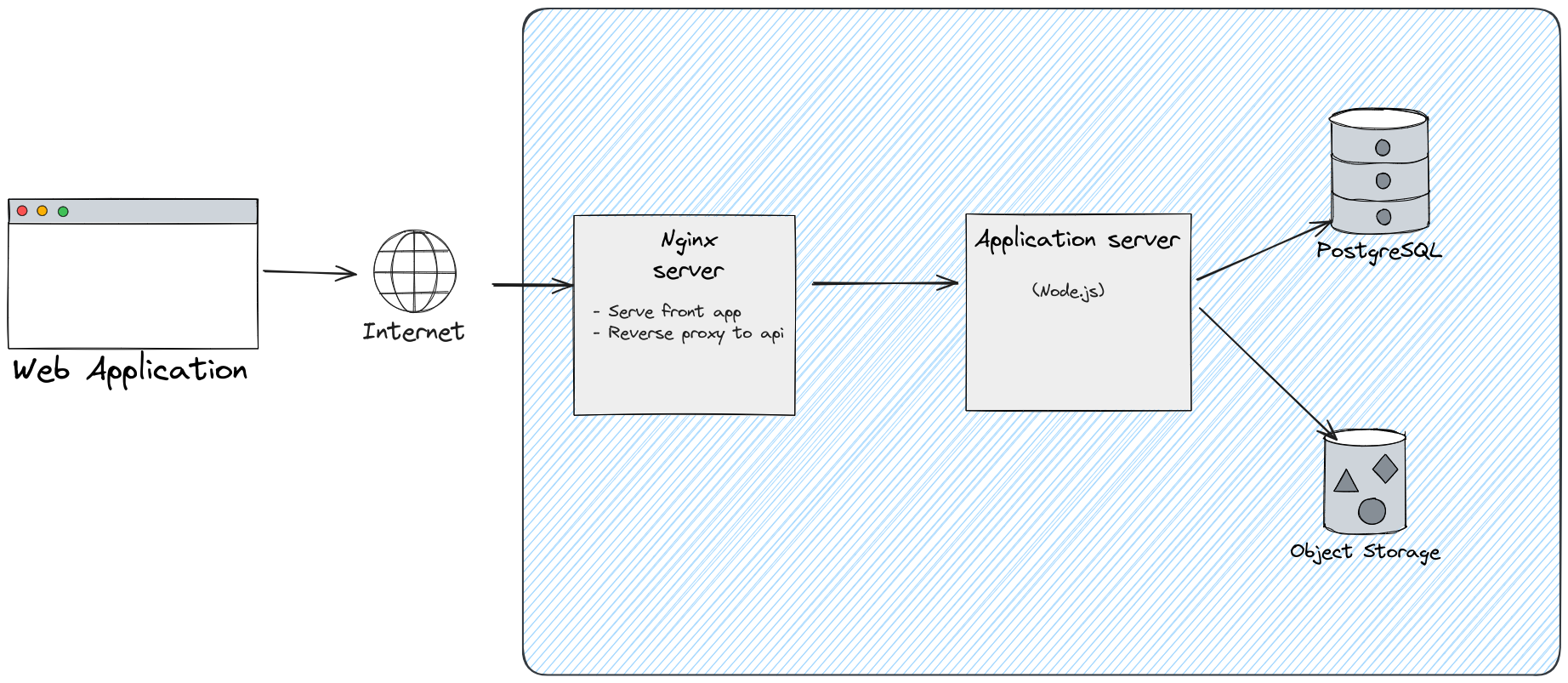

The Nginx server serves static files like HTML, CSS, and JavaScript while acting as a reverse proxy to route API requests to the Node.js application server. The app server processes business logic and handles data requests, interacting with a PostgreSQL database for relational data and an Object Storage system for large, unstructured files like media assets.

This setup ensures efficient handling of both structured and unstructured data, high performance for frontend delivery, and a clean separation of concerns. It requires little infrastructure or operations work and few specialized skills, making it an excellent choice for small teams or startups. Additionally, it is highly portable and can be deployed almost anywhere, from cloud providers to local servers, without complex dependencies or configurations.

As a French developer, I’ve found Clever Cloud to be an excellent solution for this type of architecture. It provides an easy-to-use platform with managed services for Node.js, PostgreSQL, and object storage, requiring minimal DevOps expertise. Clever Cloud’s focus on automation, scalability, and reliability makes it a perfect choice for small projects or startups looking to deploy quickly without worrying about infrastructure complexity. Plus, being a European provider ensures compliance with local regulations and offers excellent support.

The need

For a few recent applications with international ambitions, I found myself needing edge computing capabilities to ensure low latency and a seamless user experience worldwide. This is why I decided to give Fly.io a try. Its built-in global load balancing and edge deployment features could make it an excellent fit for this architecture.

2. Core Concepts of Fly.io

What is Fly.io?

Fly.io is a fully-featured Platform-as-a-Service (PaaS) with built-in edge computing capabilities, designed for global-scale deployments. It allows applications to be deployed across 35 regions worldwide, automatically routing user requests to the nearest instance for minimal latency. Fly.io features a powerful global private networking system that securely connects all your deployed instances, allowing seamless communication between services in different regions.
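To make the idea of "nearest instance" concrete, here is a purely conceptual sketch. It is not Fly.io's routing algorithm, which runs inside its network and proxy. The `RegionLatency` type, the `pickRegion` function, and the latency figures used with it are hypothetical names and sample values chosen for illustration.

```typescript
// Conceptual model only: choose the lowest-latency region that has a running instance.
// Type, function name, and any sample values are illustrative assumptions.
interface RegionLatency {
  region: string;              // region code, e.g. "cdg" (Paris)
  latencyMs: number;           // assumed round-trip time from the user
  hasRunningInstance: boolean; // whether the app currently runs in that region
}

function pickRegion(candidates: RegionLatency[]): string | null {
  let best: RegionLatency | null = null;
  for (const candidate of candidates) {
    if (!candidate.hasRunningInstance) {
      continue; // a close region is useless if nothing is deployed there
    }
    if (best === null || candidate.latencyMs < best.latencyMs) {
      best = candidate;
    }
  }
  return best === null ? null : best.region;
}
```

The point of the sketch is that latency only improves in regions where an instance is actually deployed, so the choice of deployment regions matters as much as the routing itself.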

Fly.io is a versatile PaaS that offers a broad range of capabilities beyond its core edge computing features. It supports managed databases, including multi-region PostgreSQL deployments. Fly.io also integrates object storage through Tigris.

For applications requiring intensive computational resources, Fly.io provides GPU-enabled instances, ideal for tasks such as AI/ML workloads, video processing, and scientific computations. The platform also includes persistent volumes for stateful workloads, custom TLS certificates for secure communication and CLI tools for seamless developer workflows.

How it works

The core building block of Fly.io is the Fly Machine, a lightweight VM on which we can deploy applications from a Dockerfile.

Fly.io operates differently from traditional container platforms. It doesn’t run containers directly. Instead, as explained in this detailed blog post, Fly.io uses containerd to unpack Docker images into filesystems. These filesystems are then mounted onto Firecracker microVMs, which execute the application in a secure, isolated environment. This innovative approach combines the developer-friendly workflow of Docker with the performance and security benefits of VMs, eliminating the need for a full container runtime.

A Fly Machine boots remarkably fast, in approximately 300ms, making it an ideal solution for autoscaling scenarios or quickly recovering from crashes. This speed ensures minimal downtime and rapid scaling to handle fluctuating workloads effectively.

3. Deployment Example

Example: Frontend Build and Nginx Deployment

This section demonstrates how to deploy a frontend application on Fly.io, using a pnpm monorepo and turbo for build optimizations.

Fly configuration

The Fly configuration is simple, as Nginx requires minimal resources to serve static files.

fly.toml

    #
    # See https://fly.io/docs/reference/configuration/ for information about how to use this file.
    #

    app = "<your_app_name>"
    primary_region = 'cdg'

    [build]
      dockerfile = 'Dockerfile'

    [http_service]
      internal_port = 80
      force_https = true
      auto_stop_machines = 'stop'
      auto_start_machines = true
      min_machines_running = 1
      processes = ['app']

    [[vm]]
      memory = '256MB'
      cpu_kind = 'shared'
      cpus = 1

Nginx Configuration

The Nginx configuration (nginx.conf) is customized to proxy API requests through Fly.io's private network. The Fly.io DNS resolver fdaa::3 facilitates internal service discovery, and the backend API is targeted via its internal address (http://<your_api_name>.internal).

nginx.conf

    server {
        listen 80;

        location / {
            root /usr/share/nginx/html;
            index index.html;
            try_files $uri $uri/ /index.html;
        }

        location /api/ {
            resolver [fdaa::3]; # The custom Fly.io DNS server
            proxy_pass http://<your_api_name>.internal:<your_api_internal_port>;
            rewrite /api/(.*) /$1 break;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_buffer_size 128k;
            proxy_buffers 4 256k;
            proxy_busy_buffers_size 256k;
            proxy_request_buffering off; # Disable request buffering for large file uploads
            client_max_body_size 50M;
        }

        gzip on;
        gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;
    }

The /api/ location block handles proxying API requests with Fly.io’s private networking, while the root block serves the frontend assets.
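As a rough illustration of what the `/api/` block does to an incoming path, the sketch below mirrors the prefix match and the `rewrite /api/(.*) /$1 break;` rule in TypeScript. The `toUpstreamUrl` function is a hypothetical helper, not part of Nginx or Fly.io, and `apiApp` and `apiPort` stand in for `<your_api_name>` and `<your_api_internal_port>`.

```typescript
// Hypothetical helper that models the nginx `location /api/` block.
// Returns the upstream URL for API requests, or null for paths served as static files.
function toUpstreamUrl(requestPath: string, apiApp: string, apiPort: number): string | null {
  const prefix = "/api/";
  if (!requestPath.startsWith(prefix)) {
    return null; // handled by `location /`, not proxied
  }
  // Equivalent of `rewrite /api/(.*) /$1 break;`: drop the /api prefix
  const upstreamPath = "/" + requestPath.slice(prefix.length);
  // Fly.io private networking exposes each app as <app>.internal
  return `http://${apiApp}.internal:${apiPort}${upstreamPath}`;
}
```

With these assumptions, a request for `/api/users/42` would be forwarded to `/users/42` on the API app, so the backend never sees the `/api` prefix and its routes can be written without it.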

Build Step: Multi-Stage Dockerfile

The Dockerfile leverages a multi-stage build with pnpm and turbo for optimizing the monorepo workflow. Turbo’s prune step ensures only relevant files and dependencies are included in the final image.

Dockerfile

    # syntax = docker/dockerfile:1

    # Adjust versions as desired
    ARG NODE_VERSION=23
    FROM node:${NODE_VERSION}-slim AS base

    # Adjust versions as desired
    ARG PNPM_VERSION=9.13.2
    ARG TURBO_VERSION=2.3.3
    ARG FRONT_APP_PACKAGE=<@example/front-package_name>

    LABEL fly_launch_runtime="<name_your_app>"

    WORKDIR /usr/src/app

    RUN corepack enable && corepack prepare pnpm@$PNPM_VERSION --activate
    RUN npm install turbo@$TURBO_VERSION --global
    RUN pnpm config set store-dir ~/.pnpm-store

    # Pruner stage. cf https://turbo.build/repo/docs/reference/prune
    FROM base AS pruner
    COPY . .
    RUN pnpm turbo prune --scope=$FRONT_APP_PACKAGE --docker

    # Builder stage
    FROM base AS builder

    # Install packages needed to build node modules
    RUN apt-get update -qq && \
        apt-get install --no-install-recommends -y build-essential node-gyp pkg-config python-is-python3

    # Install deps (copied from the pruner stage output)
    COPY --from=pruner /usr/src/app/out/pnpm-lock.yaml ./pnpm-lock.yaml
    COPY --from=pruner /usr/src/app/out/pnpm-workspace.yaml ./pnpm-workspace.yaml
    COPY --from=pruner /usr/src/app/out/json/ .
    RUN pnpm install

    # Build application
    COPY --from=pruner /usr/src/app/out/full/ .
    # Build secrets are mounted under /run/secrets/<id> for this step only
    RUN --mount=type=secret,id=SOME \
        --mount=type=secret,id=REQUIRED \
        --mount=type=secret,id=SECRET \
        VITE_SOME="$(cat /run/secrets/SOME)" \
        VITE_REQUIRED="$(cat /run/secrets/REQUIRED)" \
        VITE_SECRET="$(cat /run/secrets/SECRET)" \
        pnpm run build:front

    # Final stage for app image
    FROM nginx

    # Copy built application from the builder stage
    COPY --from=builder /usr/src/app/front/dist /usr/share/nginx/html
    COPY front/nginx.conf /etc/nginx/conf.d/default.conf

    # Start the server
    EXPOSE 80
    CMD [ "/usr/sbin/nginx", "-g", "daemon off;" ]

This Dockerfile performs the following steps:

Base Stage: Prepares the environment with pnpm and turbo

Pruner Stage: Uses turbo to prune unnecessary files from the monorepo

Builder Stage: Installs dependencies and builds the frontend

Final Stage: Copies the built assets to an Nginx container for deployment
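The builder stage hands values to the frontend as `VITE_`-prefixed variables, which Vite exposes to application code through `import.meta.env`. A minimal sketch of how the frontend might validate them at startup is shown below. The `FrontendConfig` interface and `readFrontendConfig` function are hypothetical. Only the three variable names come from the Dockerfile.

```typescript
// Hypothetical startup check for the VITE_* variables set in the Dockerfile's build step.
interface FrontendConfig {
  some: string;
  required: string;
  secret: string;
}

function readFrontendConfig(env: Record<string, string | undefined>): FrontendConfig {
  const read = (key: string): string => {
    const value = env[key];
    // A variable can be missing or empty, for example if its build secret was not provided
    if (value === undefined || value.trim() === "") {
      throw new Error(`Missing build-time variable: ${key}`);
    }
    return value;
  };
  return {
    some: read("VITE_SOME"),
    required: read("VITE_REQUIRED"),
    secret: read("VITE_SECRET"),
  };
}
```

In a Vite application this would typically be called once as `readFrontendConfig(import.meta.env)`. Taking the environment as a parameter keeps the function easy to test. Note that anything exposed through `VITE_` variables ends up in the client bundle, so this mechanism suits public configuration values rather than real credentials.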

CI/CD with GitHub Actions

The CD pipeline is straightforward, using the superfly/flyctl-actions/setup-flyctl GitHub Action to deploy the frontend application:

fly-deploy-front.yml

    # See https://fly.io/docs/app-guides/continuous-deployment-with-github-actions/
    name: Fly Deploy Front
    on:
      push:
        branches:
          - main
    jobs:
      deploy:
        name: Deploy front app
        runs-on: ubuntu-latest
        concurrency: deploy-front-group
        steps:
          - uses: actions/checkout@v4
          - uses: superfly/flyctl-actions/setup-flyctl@master
          # Each --build-secret name must match the file read under /run/secrets/ in the Dockerfile
          - run: >
              flyctl deploy --config front/fly.toml --remote-only
              --build-secret SOME=${{ secrets.SENTRY_DSN }}
              --build-secret REQUIRED=${{ secrets.OP_KEY }}
              --build-secret SECRET=${{ secrets.AUTH_DOMAIN }}
            env:
              FLY_API_TOKEN: ${{ secrets.FLY_FRONT_TOKEN }}
              # ...

This pipeline ensures fast and secure deployments by integrating secret management and Fly.io’s CLI tools directly into the GitHub workflow.
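Each `--build-secret` name passed to `flyctl deploy` has to match the file name the Dockerfile reads under `/run/secrets/`, otherwise the corresponding `VITE_` variable is empty at build time. The sketch below shows one way to assemble the arguments and check the names before deploying. Both `buildDeployArgs` and `missingSecretIds` are hypothetical helpers. Only the flags `--config`, `--remote-only`, and `--build-secret` come from the workflow.

```typescript
// Hypothetical helper: builds the argument list for `flyctl deploy`.
function buildDeployArgs(configPath: string, buildSecrets: Record<string, string>): string[] {
  const args = ["deploy", "--config", configPath, "--remote-only"];
  for (const [id, value] of Object.entries(buildSecrets)) {
    args.push("--build-secret", `${id}=${value}`);
  }
  return args;
}

// Hypothetical helper: lists secret ids the Dockerfile expects but the pipeline does not provide.
function missingSecretIds(expectedIds: string[], buildSecrets: Record<string, string>): string[] {
  return expectedIds.filter(
    (id) => !Object.prototype.hasOwnProperty.call(buildSecrets, id)
  );
}
```

Calling `missingSecretIds(["SOME", "REQUIRED", "SECRET"], secrets)` before the deploy step would surface a misspelled secret name early instead of producing a frontend build with a blank value.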

And that's it. Pretty simple, right?

4. Costs

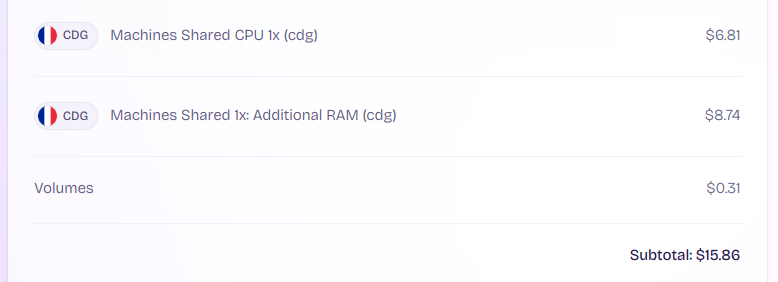

Fly.io is cost-effective, making it an attractive option for developers and small teams. For a development platform implementing the simple architecture described above, the monthly cost is approximately $16.

Conclusion

Fly.io is a highly effective and versatile solution.

It addresses the requirements of the use case described in the introduction.

Its scalability makes it adaptable to other use cases.

Fly.io offers a scalable, versatile solution that meets the requirements of global applications. Fly.io’s edge capabilities and developer-friendly tools make it an outstanding choice for modern web applications.

However, for use cases that don’t demand edge computing, Clever Cloud remains a strong contender due to its regional compliance and local support.